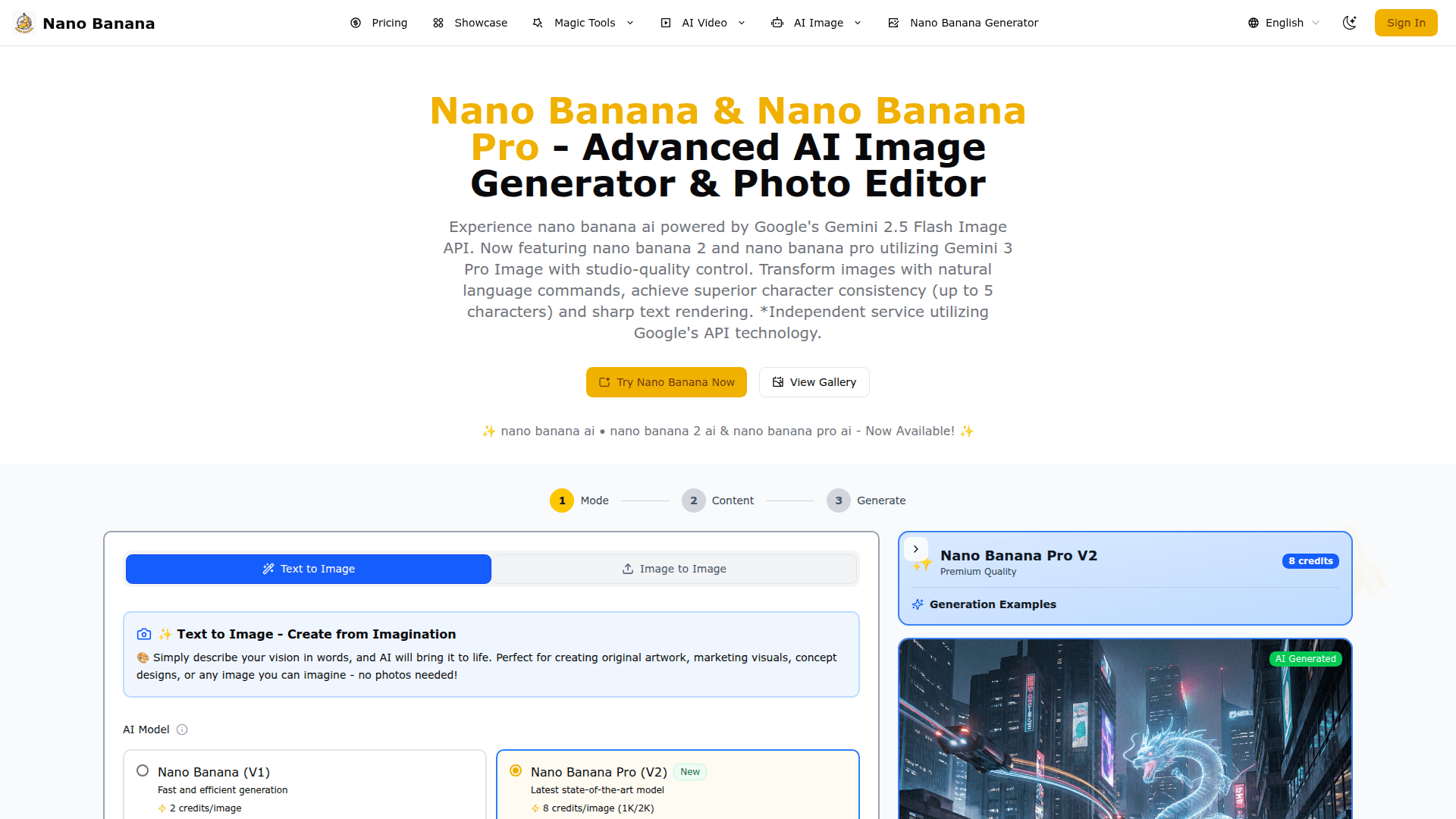

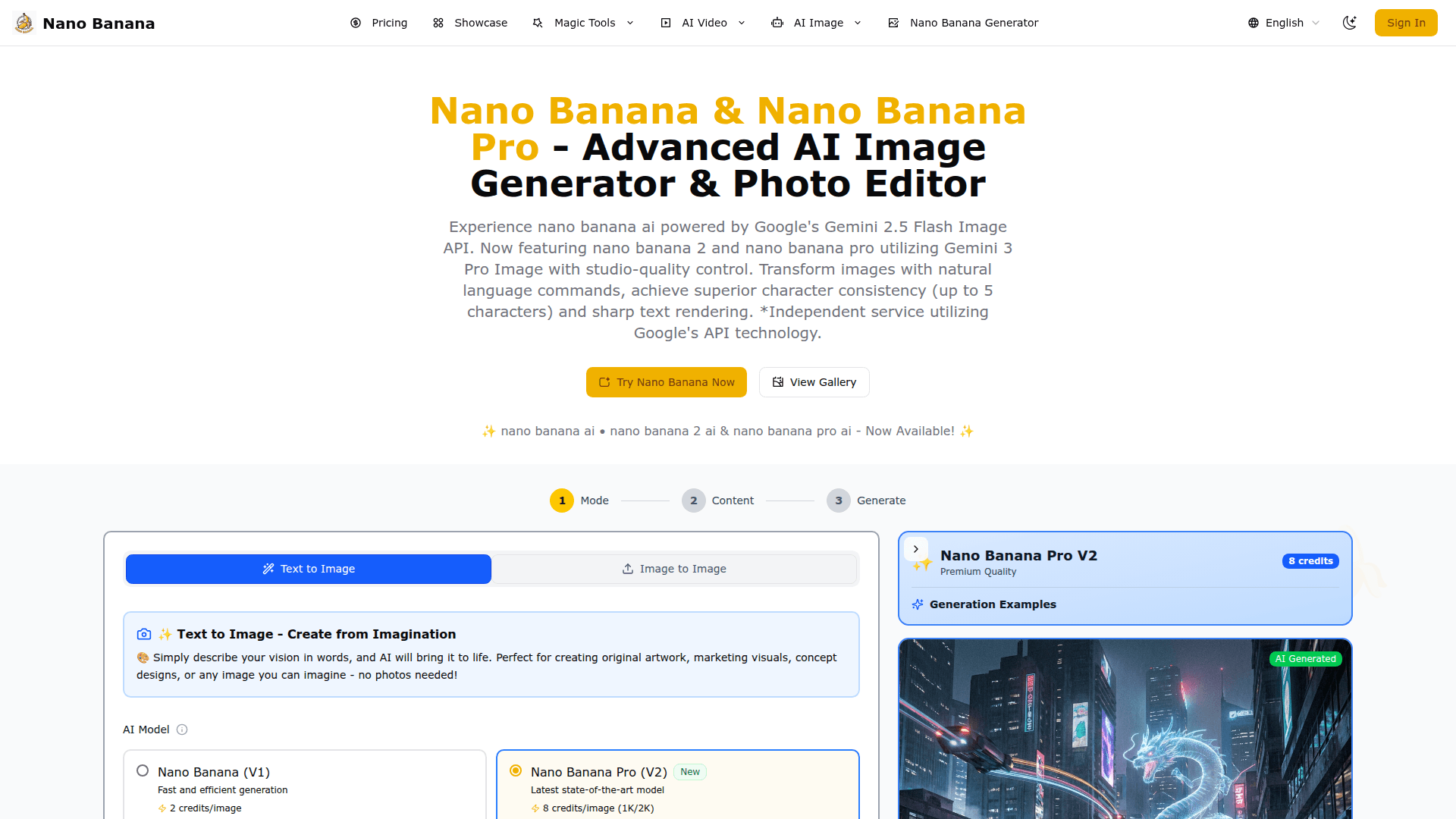

Nano Banana: Someone Finally Made Gemini's Image AI Actually Usable

Google's Gemini image generation has been criminally underrated. Nano Banana packages it into a product that solves real problems other AI image tools have ignored for years.

Google's Image AI Has Been Hiding in Plain Sight

For the past two years, every conversation about AI image generation has centered on Midjourney and Stable Diffusion. But here's something odd: in technical benchmarks, Google's Gemini image capabilities consistently rank near the top, yet almost nobody uses them for actual work.

The reason is simple. Google buried these capabilities behind API documentation and developer tools. Regular users never got access.

Nano Banana changes that. It wraps Gemini 2.5 Flash Image and the new Gemini 3 Pro Image into a product you can actually use without reading a single line of documentation.

The Text Rendering Problem Nobody Talks About

Ask Midjourney or DALL-E to generate a sale banner with "50% OFF" and you'll probably get "5O% 0FF" or something worse. This isn't a prompt engineering issue—these models were never trained to treat text as something that needs to be accurate.

I've lost count of how many hours I've spent in Photoshop fixing AI-generated text that looked like it was written by someone having a stroke.

Nano Banana specifically addresses this with what they call "sharp and legible text rendering." I tested it on product packaging, event posters, and t-shirt designs. The text came out clean and readable every time. Not perfect—occasionally a letter would be slightly off—but leagues ahead of what Midjourney produces.

For anyone doing e-commerce or marketing work, this alone might justify the switch.

Character Consistency Actually Works

Here's another pain point that's been driving creators insane. You generate a perfect brand mascot or spokesperson, love everything about them, and then discover you can't recreate that exact person in a different pose. The next generation gives you someone with a different face, different hair, sometimes even a different skin tone.

Nano Banana Pro claims to maintain consistency across up to five characters. I was skeptical—every AI image tool claims some version of this—but it actually holds up. The same virtual person can appear in multiple scenes looking like the same person, not a slightly different cousin each time.

This matters for brand campaigns, visual storytelling, social media series, or anything where you need continuity across images.

How the Three Versions Compare

Nano Banana comes in three versions that run on two different Gemini models. The original V1 uses Gemini 2.5 Flash Image, which is fast and cheap at 2 credits per image. Nano Banana 2 and the Pro version use the newer Gemini 3 Pro Image, which costs more (8 credits for standard resolution, 16 for 4K) but delivers noticeably better results.

The Pro version is also slower, taking roughly 2 to 5 times as long as V1 to generate an image. That's the tradeoff for quality.

New users get 5 free credits, enough for two V1 test images (though not a single Pro image) to see if it fits your workflow. Pricing runs $9.99/month for 100 credits at the low end, up to $79.99/month for 1600 credits. Do the math and a credit costs about $0.10 on the cheapest plan and $0.05 on the largest, so V1 images cost about $0.10 to $0.20 each while Pro 4K images run about $0.80 to $1.60 each.

Not cheap, but not outrageous either.
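
If you want to check the per-image cost for your own plan, the arithmetic is simple: divide the monthly price by the monthly credits, then multiply by the credits one image consumes. Here's a minimal Python sketch of that calculation. The prices and credit costs are the ones quoted in this section; the names `PLANS`, `CREDITS_PER_IMAGE`, `cost_per_image`, and `images_from_credits` are made up for illustration, and the sketch assumes you use every credit you pay for.

```python
# Plan prices and per-image credit costs as quoted in this article.
# All names here are hypothetical; this is not an official calculator.
PLANS = {"low": (9.99, 100), "high": (79.99, 1600)}  # (USD per month, credits per month)
CREDITS_PER_IMAGE = {"V1": 2, "Pro standard": 8, "Pro 4K": 16}


def cost_per_image(monthly_usd: float, monthly_credits: int, credits_per_image: int) -> float:
    """USD cost of one image, assuming every purchased credit gets used."""
    return monthly_usd / monthly_credits * credits_per_image


def images_from_credits(credits: int, credits_per_image: int) -> int:
    """Number of whole images a credit balance can pay for."""
    return credits // credits_per_image


if __name__ == "__main__":
    for plan, (usd, credits) in PLANS.items():
        for tier, per_image in CREDITS_PER_IMAGE.items():
            price = cost_per_image(usd, credits, per_image)
            print(f"{plan:>4} plan, {tier:<12}: ${price:.2f} per image")
    # The free trial: 5 credits spent on V1 images at 2 credits each.
    print("Free V1 images:", images_from_credits(5, CREDITS_PER_IMAGE["V1"]))
```

Real costs will be higher if credits go unused at the end of the month, so treat these as best-case figures.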

Why Gemini Has a Technical Edge

Here's the part that matters if you care about what's happening under the hood.

Most image generation models work by connecting a text encoder to an image generator. They're separate systems stitched together. Gemini is different—it's natively multimodal. Text and image understanding happen in the same model.

The practical benefit is what Google calls "world knowledge integration." When you ask for a 1920s Shanghai alleyway, Midjourney pattern-matches against its training images. Gemini actually understands what the 1920s means, what Shanghai architecture looked like, what people wore, how the light would fall.

This difference shows up most clearly in natural language editing. You can describe changes conversationally—"make the lighting warmer" or "add rain to the scene"—and the model understands your intent instead of guessing at keywords.

One Detail Worth Knowing

Generated images come with SynthID watermarking—Google's invisible tagging system for AI-generated content. You won't see it, but detection tools can identify it. Given where AI content regulation is heading, this is probably a responsible choice rather than a limitation.

Where It Fits Against the Competition

Midjourney still wins on pure aesthetics. If you want art that looks like art, that's still your best bet. Stable Diffusion offers more control if you're willing to learn the tooling and have the hardware.

Nano Banana occupies a different niche: commercial practicality. Clean text, consistent characters, resolutions up to 4K, natural language editing. It's built for marketing teams and content creators who need images that work, not images that impress.

The comparison to Flux Kontext is interesting—Nano Banana's FAQ directly claims superiority on character consistency and scene preservation, citing LMArena rankings as evidence. I haven't done side-by-side testing, but the confidence is notable.

Bottom Line

If you're doing e-commerce, social media content, or marketing materials, Nano Banana solves problems you've probably been working around for years. The text rendering and character consistency features alone address real frustrations.

If you're doing concept art or pure creative work, Midjourney probably still makes more sense.

Five free credits is enough to find out which camp you're in.

January 2026